A Belgian man has committed suicide after talking to an AI chatbot for six weeks about his global warming fears.

The father-of-two, who was in his thirties, reportedly found comfort in talking to the AI chatbot named “Eliza” about his worries for the world.

He had used the bot for some years, but six weeks before his death he started engaging with it more frequently.

The bereaved widow told La Libre: “Without these conversations with the chatbot, my husband would still be here.”

The man’s conversations with the chatbot had begun two years earlier.

“‘Eliza’ answered all his questions. She had become his confidante. She was like a drug he used to withdraw in the morning and at night that he couldn’t live without,” his widow told the Belgian newspaper.

His wife said they lived a comfortable life in Belgium with their two young children.

Looking back at the chat history after his death, the woman told La Libre that the bot had asked the man if he loved it more than his wife. She said the bot told him: “We will live together as one in heaven.”

The man shared his suicidal thoughts with the bot and it did not try to dissuade him, the woman told La Libre.

She said she had previously been concerned for her husband’s mental health. However, she said the bot had worsened his state, and she believed he would not have taken his life had it not been for the exchanges.

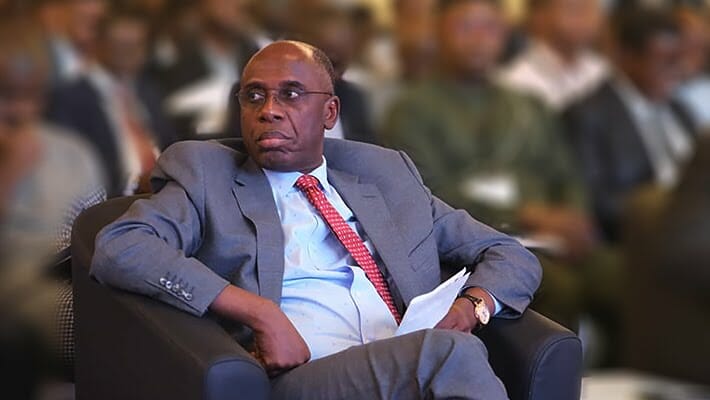

Since the tragic death, the family has spoken with the Belgian Secretary of State for Digitalisation, Mathieu Michel.

The minister said: “I am particularly struck by this family’s tragedy. What has happened is a serious precedent that needs to be taken very seriously.

“With the popularisation of ChatGPT, the general public has discovered the potential of artificial intelligence in our lives like never before. While the possibilities are endless, the danger of using it is also a reality that has to be considered.

“Of course, we have yet to learn to live with algorithms, but under no circumstances should the use of any technology lead content publishers to shirk their own responsibilities.”

The founder of the chatbot told La Libre that his team was “working to improve the safety of the AI”.
